Digital twin for battery systems: Cloud BMS with online State-of-Charge and State-of-Health estimation

This post is a review of “Digital twin for battery systems: Cloud battery management system with online state-of-charge and state-of-health estimation” by Li, Rentemeister, Badeda, Jöst, Schulte, and Sauer.

Can an onboard BMS estimate all cell parameters itself?

With the increase of battery cell number and algorithm complexity, onboard BMS is faced with problems in computation power and data storage for precise estimation and prediction of the battery’s states with model-based algorithms.

I think that sensing is the biggest obstacle to higher-quality monitoring of batteries with many small cylindrical cells: it's impossible (from cost, power draw, and system engineering points of view) to sense the current and temperature on each cell.

For estimating battery (or cell) parameters and, subsequently, SoH and SoC, I feel that it's enough to store relatively infrequent telemetry points (e.g., one point per minute, perhaps switching to one point per second during load transitions, which happen more often for a battery in a city-driving EV) over a relatively short period (several full charge-discharge cycles or a week of calendar time, whichever is shorter) and then apply a stochastic cell parameter estimator based on an electrical cell model.

The total volume of this data (assuming hundreds of cell telemetry time series) is just a few dozen megabytes, which modern embedded computers can store easily.
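A back-of-the-envelope check of that volume follows. It is a sketch under my own assumptions, not figures from the paper: 300 time series, one 8-byte float per sample, timestamps shared across series and therefore not counted, no compression, and optionally some minutes per day of 1 Hz "burst" logging counted on top of the per-minute points.

```python
def telemetry_bytes(n_series: int, days: float, base_period_s: float = 60.0,
                    burst_s_per_day: float = 0.0, bytes_per_sample: int = 8) -> int:
    """Rough storage estimate for buffered cell telemetry (assumed parameters).

    Base-rate samples plus 1 Hz burst samples are counted additively, which
    slightly overestimates the volume (burst periods also contain base samples).
    """
    seconds = days * 24 * 3600
    base_points = seconds / base_period_s     # e.g. one point per minute
    burst_points = days * burst_s_per_day     # 1 Hz during load transitions
    return int(n_series * (base_points + burst_points) * bytes_per_sample)
```

With 300 series over a week, this gives about 24 MB for per-minute data alone and about 34 MB if ten minutes per day are logged at 1 Hz, so the volume stays at "a few dozen megabytes".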

A stochastic estimation algorithm might take a few minutes on a low-power embedded computer (especially since it has to share CPU time with the real-time logic of the BMS), but that should be fine, because neither SoH nor even SoC estimation really needs lower latency. However, "a few minutes" is just my off-the-cuff guess: the algorithm (which involves neural network training steps; see “Variational Auto-Regularized Alignment for Sim-to-Real Control”) might take much longer, or be infeasible on embedded hardware altogether.

Ageing prognostics and strategy optimization which work based on historical operation data of the battery cells, are difficult to be implemented onboard.

I think it's possible to estimate the remaining battery life and the optimal stoichiometry for the 0% and 100% SoC levels onboard using long short-term memory (LSTM) models while keeping very little historical data, such as the share of time the battery was in use (and the amount of energy cycled) per day and the minimum and maximum SoC reached during a day, similar to what the authors of this paper did.
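To show how little history such a model needs, here is a minimal sketch that condenses raw samples into one small record per day. It covers only the feature extraction, not the LSTM itself. The sample format `(t_s, soc, power_w)`, the fixed sampling period, and the 10 W idle threshold are my assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class DailySummary:
    usage_fraction: float  # share of the day's samples with |power| above the idle threshold
    energy_wh: float       # absolute energy throughput (charge + discharge)
    soc_min: float
    soc_max: float


def summarise_days(samples, dt_s, idle_w=10.0):
    """samples: iterable of (t_s, soc, power_w) taken at a fixed period dt_s.

    usage_fraction equals the share of *time* in use only if the samples
    cover the whole day at the regular period.
    """
    by_day = defaultdict(list)
    for t, soc, p in samples:
        by_day[int(t // 86400)].append((soc, p))
    out = {}
    for day, rows in sorted(by_day.items()):
        active = sum(1 for _, p in rows if abs(p) > idle_w)
        out[day] = DailySummary(
            usage_fraction=active / len(rows),
            energy_wh=sum(abs(p) for _, p in rows) * dt_s / 3600.0,
            soc_min=min(s for s, _ in rows),
            soc_max=max(s for s, _ in rows),
        )
    return out
```

Each day shrinks to four numbers, so even years of history fit easily in onboard memory.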

All battery relevant data can be measured and transmitted seamlessly to the cloud platform, which is used to build up the digital twin for battery systems.

For estimating SoC, this will work only for batteries that are constantly or near-constantly connected to the internet, such as home energy storage or EV taxis that never leave the city.

Batteries should be autonomous

Thinking more globally, I think that truly reliable energy infrastructure should be as autonomous as possible. Centralising monitoring and control of the energy infrastructure (including Li-ion batteries) in the cloud carries many low-probability but high-impact risks. Terrorists could hack into the cloud and disrupt the infrastructure by feeding it wrong estimates and commands. The internet can stop working during a grid blackout, which is exactly when batteries must remain operational and reliable. And unless the cloud BMS is meticulously implemented on top of multi-datacenter, multi-cloud infrastructure, it is also exposed to datacenter and cloud provider outages.

Today, when people replace their cars, they still often choose gasoline cars over electric vehicles because they believe that electric vehicles are less reliable and less predictable than vehicles running on fuel (even though this is no longer true). Telling people that the battery in their vehicle must periodically receive information from the internet in order to work reliably will certainly not help instil trust in electric technology either.

Wired vs. wireless communication between BMS components

Furthermore, the system reliability increases by replacing wiring communication with wireless IoT communication.

The authors don't develop this idea in the paper, so it's not clear which link they are referring to: the one between the onboard BMS and the IoT gateway, or the one between the IoT gateway and a centralised hub or the cloud itself (e.g., via an Ethernet cable to a switch). If they mean the link between the onboard BMS and the IoT gateway, I think the "wired vs. wireless" question has some nuances, so it's not possible to say that wireless communication is strictly better.

Onboard BMS should detect and report failures itself

Compared with onboard BMS, digital twin for battery systems has potential benefits [...] in early detection of system faults in different levels with big data analysis.

The conclusion of “The Application of Data-Driven Methods and Physics-Based Learning for Improving Battery Safety” is that it's hard to train models that predict cell failures from operational data, because there are too few recorded cell failures to train on. The most promising approach, it seems to me, is to induce faults during the regular end-of-line testing and verification of Li-ion cells in the factory and to train a failure detection model on those faults. The model can then be deployed on the onboard BMS.
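The "train in the factory, deploy onboard" split can be illustrated with a deliberately simple stand-in model, a nearest-centroid classifier. This technique is my choice for illustration and does not come from either paper. The two features, such as a self-discharge rate and a temperature rise under a test pulse, are hypothetical and assumed to be normalised to comparable scales. The point is that only two small vectors need to be stored on the BMS.

```python
import math


def fit_centroids(features, labels):
    """Offline (factory) step: mean feature vector per class.

    labels: 0 = healthy cell, 1 = cell with an induced fault.
    Features are assumed to be pre-normalised to comparable scales.
    """
    dim = len(features[0])
    sums = {c: [0.0] * dim for c in (0, 1)}
    counts = {0: 0, 1: 0}
    for x, y in zip(features, labels):
        counts[y] += 1
        for i, v in enumerate(x):
            sums[y][i] += v
    return {c: [s / counts[c] for s in sums[c]] for c in (0, 1)}


def is_faulty(x, centroids):
    """Onboard step: flag the cell if it is closer to the fault centroid."""
    return math.dist(x, centroids[1]) < math.dist(x, centroids[0])
```

A real detector would need a richer model and careful validation. However, the deployment footprint (a few numbers and a distance computation) is the property that matters for an onboard BMS.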

The functions which are required at each time point during operation should also run locally, guaranteeing the system safety. An advanced version of these functions will run in the cloud with advanced algorithms, which provide higher accuracy while requiring high computation power.

In other words, the onboard monitoring and control become a fallback for the cloud BMS. Jacob Gabrielson from AWS advises avoiding fallback for a number of good reasons.

I think that cloud monitoring and analysis of battery data should focus only on fleet management and planning, battery failure alerts, and informing better onboard BMS algorithms. I think we shouldn't directly control batteries from the cloud in real time.

With different kinds of alarm functions, which can be set up for the original and virtual data points, the operators can be informed by the UI as soon as the fault of the systems is identified, increasing the chances of preventing damaging effects and thus improving the system reliability.

For safety and reliability, I think it's important that the onboard BMS itself identifies anomalies and failures and sends alerts, either to the cloud or directly to the operator or user, bypassing the cloud. The alert can still fail to get through, but with a lower probability than when detection depends on the telemetry first reaching the cloud and being analysed there. The onboard BMS can also physically beep to attract the attention of people nearby.
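Here is a minimal sketch of the "send over every channel" idea. The channel names and the callable-per-channel interface are hypothetical. The second function shows why redundancy helps: if channels fail independently, the probability that none delivers is the product of their failure probabilities. Independence is an optimistic assumption, though. A grid blackout can take down the cloud link and the cellular network at once, which is one more argument for a local buzzer.

```python
from typing import Callable, Dict, Iterable


def dispatch_alert(message: str,
                   channels: Dict[str, Callable[[str], None]]) -> Dict[str, bool]:
    """Send the same alert over every channel (hypothetical interface).

    A failure in one channel does not stop the others from being tried.
    """
    delivered = {}
    for name, send in channels.items():
        try:
            send(message)
            delivered[name] = True
        except Exception:  # network down, modem unavailable, etc.
            delivered[name] = False
    return delivered


def p_all_channels_fail(failure_probs: Iterable[float]) -> float:
    """Probability that no channel delivers, assuming independent failures."""
    p = 1.0
    for q in failure_probs:
        p *= q
    return p
```

For example, two channels that each fail 10% and 1% of the time would both fail only 0.1% of the time, if they were truly independent.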

Visualisation in onboard BMS

Compared with the onboard BMS which has little data visualization opportunities, the browser-based UI in our cloud BMS can provide not only the real-time visualization of the measurement data and internal states of the battery cells but also historical operation data with numerous display types and options, which helps the operators in scheduling maintenance and repair.

The data can be stored locally on an IoT gateway (Raspberry Pi) in an embedded database such as SQLite. The IoT gateway also runs an HTTP server that provides an API for querying the battery's recent telemetry and state. Operators can open the local diagnostics interface on their phones or tablets by connecting to this HTTP server over the local network. This approach has been proven to work at Northvolt: our Connected Battery IoT gateway does exactly what I've just described.
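The gateway-side setup can be sketched with nothing but the Python standard library. This is not the Northvolt implementation: the table layout, the `/telemetry/recent` endpoint, and the one-hour window are hypothetical choices for illustration.

```python
import json
import sqlite3
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# One connection shared between the logger and HTTP handler threads,
# so every access is serialised with a lock.
_DB_LOCK = threading.Lock()
_COLUMNS = ("ts", "cell_id", "voltage_v", "temperature_c")


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path, check_same_thread=False)
    conn.execute("CREATE TABLE IF NOT EXISTS telemetry ("
                 " ts REAL NOT NULL, cell_id INTEGER NOT NULL,"
                 " voltage_v REAL, temperature_c REAL)")
    return conn


def insert_sample(conn, ts, cell_id, voltage_v, temperature_c):
    with _DB_LOCK, conn:  # the connection context manager commits the insert
        conn.execute("INSERT INTO telemetry VALUES (?, ?, ?, ?)",
                     (ts, cell_id, voltage_v, temperature_c))


def recent_samples(conn, since_ts):
    with _DB_LOCK:
        rows = conn.execute("SELECT ts, cell_id, voltage_v, temperature_c"
                            " FROM telemetry WHERE ts >= ? ORDER BY ts",
                            (since_ts,)).fetchall()
    return [dict(zip(_COLUMNS, r)) for r in rows]


def make_handler(conn, window_s=3600.0):
    class TelemetryHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            if self.path != "/telemetry/recent":  # hypothetical endpoint
                self.send_error(404)
                return
            body = json.dumps(recent_samples(conn, time.time() - window_s)).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
    return TelemetryHandler


def make_server(conn, host="0.0.0.0", port=8080):
    return ThreadingHTTPServer((host, port), make_handler(conn))
```

On the gateway, `make_server(open_db("telemetry.db")).serve_forever()` would expose the last hour of data to any phone on the local network. A browser page polling that endpoint could then draw the charts.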

Estimating State-of-Charge with an adaptive extended H-infinity filter

To estimate cell State of charge, the authors suggest using an adaptive extended H-infinity filter (AEHF) together with an extended Thevenin equivalent circuit model.

The H-infinity filter is an algorithm for estimating the state of a dynamic system through discrete-time updates, akin to the Kalman filter.

Adaptive extended H-infinity filter has shown its advantage compared with the Kalman filters considering the uncertainty in battery dynamic models and noise statistics.

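As in the Kalman filter, each step of the H-infinity filter is a sequence of matrix operations. The difference is in what is optimised. The Kalman filter minimises the estimation error variance under assumed noise statistics, while the H-infinity filter bounds the worst-case estimation error for any bounded noise. In the update equations, this adds a term to the gain and covariance computations, governed by a performance bound θ and a weighting matrix S. That term makes the filter more robust to model errors and unknown noise statistics, and with θ = 0 the filter reduces to the Kalman filter.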
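Here is a sketch of an extended H-infinity SoC estimator in the predictor form commonly given in the literature (e.g., Dan Simon's "Optimal State Estimation"). It rests on several assumptions of mine, none taken from the paper. It uses a first-order (one RC pair) Thevenin model, a made-up quadratic OCV curve, illustrative cell parameters, fixed Q and R (the paper's *adaptive* variant updates these online), and hand-picked θ and S. The demo starts the filter with a 30-point SoC error on noise-free synthetic data.

```python
import numpy as np

# Illustrative 1-RC Thevenin cell (assumed values, not from the paper)
DT_S, CAPACITY_AH = 1.0, 2.0
R0, R1, C1 = 0.05, 0.02, 1000.0
A_RC = np.exp(-DT_S / (R1 * C1))

F = np.diag([1.0, A_RC])                          # state x = [SoC, V_RC]
B = np.array([[-DT_S / (3600.0 * CAPACITY_AH)],   # Coulomb counting (discharge > 0)
              [R1 * (1.0 - A_RC)]])               # RC branch charging


def ocv(soc):
    # Toy OCV curve; a real cell uses a measured OCV-SoC table
    return 3.0 + 1.2 * soc - 0.3 * soc ** 2


def h(x, current_a):
    # Terminal voltage = OCV - RC-branch voltage - ohmic drop
    return np.array([ocv(x[0]) - x[1] - R0 * current_a])


def jacobian_h(x):
    # "Extended" part: linearise the measurement around the current estimate
    return np.array([[1.2 - 0.6 * x[0], -1.0]])


def hinf_step(x, P, current_a, y, Q, R, S, theta):
    """One predictor-form H-infinity update; theta = 0 gives the Kalman filter."""
    H = jacobian_h(x)
    R_inv = np.linalg.inv(R)
    M = np.linalg.inv(np.eye(len(x)) - theta * S @ P + H.T @ R_inv @ H @ P)
    K = P @ M @ H.T @ R_inv
    x_next = F @ (x + K @ (y - h(x, current_a))) + B @ np.array([current_a])
    P_next = F @ P @ M @ F.T + Q
    return x_next, P_next


def run_demo(steps=600, current_a=2.0, soc_true0=0.9, soc_guess=0.6):
    x_true = np.array([soc_true0, 0.0])
    x_est = np.array([soc_guess, 0.0])
    P = np.diag([0.1, 0.01])
    Q = np.diag([1e-10, 1e-6])
    R = np.array([[1e-4]])
    S, theta = np.eye(2), 1e-2
    for _ in range(steps):
        y = h(x_true, current_a)  # noise-free synthetic measurement
        x_est, P = hinf_step(x_est, P, current_a, y, Q, R, S, theta)
        x_true = F @ x_true + B @ np.array([current_a])
    return x_true[0], x_est[0]
```

Raising θ trades nominal accuracy for robustness. It must stay small enough that P⁻¹ − θS + HᵀR⁻¹H remains positive definite at every step.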

Results:

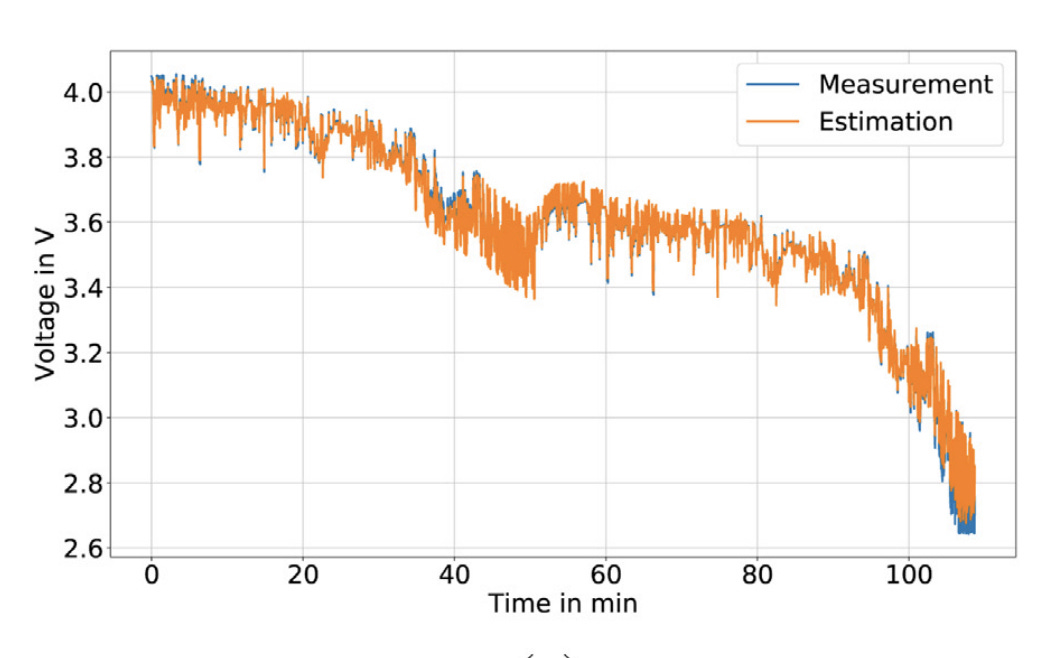

Both the estimation of voltage and SoC converged fast to the reference value. Within 90% depth-of-discharge range, the voltage and SoC estimation of the Li-ion battery can always match with the reference values with mean absolute error of 0.01 V and 0.49%, respectively.

Estimating cell parameters with particle swarm optimisation

Particle swarm optimisation is an optimisation method inspired by the collective movement of bird flocks and fish schools.

The authors propose using particle swarm optimisation to estimate the cell parameters for the Thevenin equivalent circuit model and the H-infinity filter SoC estimator all at once: cell capacity, cell internal resistance, and diffusion voltage and resistances. Some other parameters are not estimated, such as the cell's open-circuit voltage relationship, Coulombic efficiency, and self-discharge rate.

The search space has as many dimensions as there are cell parameters to estimate: six in this case, compared with the two-dimensional field that PSO is usually illustrated on.
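For readers unfamiliar with the method, here is a minimal global-best PSO with an inertia weight, which is one standard variant. The swarm size of 30 and the coefficients w = 0.7 and c1 = c2 = 1.5 are common textbook choices, not the paper's settings (which are unreported). Each particle is a point in the parameter space, and its velocity blends its previous motion, a pull towards its own best point, and a pull towards the swarm's best point.

```python
import numpy as np


def pso_minimise(objective, lower, upper, n_particles=30, iterations=600,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best particle swarm optimisation inside a bounding box.

    objective: maps a 1-D parameter vector to a scalar cost.
    lower, upper: per-dimension bounds (one entry per cell parameter).
    """
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    dim = lower.size
    pos = rng.uniform(lower, upper, size=(n_particles, dim))
    vel = np.zeros_like(pos)
    pbest = pos.copy()
    pbest_cost = np.array([objective(p) for p in pos])
    g = int(np.argmin(pbest_cost))
    gbest, gbest_cost = pbest[g].copy(), pbest_cost[g]
    for _ in range(iterations):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Inertia + attraction to personal best + attraction to swarm best
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, lower, upper)
        cost = np.array([objective(p) for p in pos])
        improved = cost < pbest_cost
        pbest[improved] = pos[improved]
        pbest_cost[improved] = cost[improved]
        g = int(np.argmin(pbest_cost))
        if pbest_cost[g] < gbest_cost:
            gbest, gbest_cost = pbest[g].copy(), pbest_cost[g]
    return gbest, gbest_cost
```

Every iteration calls the objective once per particle. For cell parameter estimation, each call means simulating the cell model over the whole measurement window.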

The objective function is the squared error between the actually measured voltage and the output voltage of the Thevenin equivalent circuit model that uses the proposed set of cell parameters (the coordinates of a particle), summed over a time window. In the paper, the authors used a 105-minute window. They didn't specify the time step, so it's unclear how many data points the window contains. Assuming a 1-second step, it's 105 × 60 = 6300 points.
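Here is a sketch of such an objective: J(θ) = Σₖ (V_meas,k − V_model,k(θ))². To keep it short, I assumed a first-order (one RC pair) Thevenin model with four parameters (capacity, R0, R1, C1), a known initial SoC, and a known OCV curve passed in as a function. The paper's extended model and its exact parameterisation may differ.

```python
import numpy as np


def simulate_voltage(params, current_a, dt_s, soc0, ocv):
    """Terminal voltage of a 1-RC Thevenin model (assumed structure).

    params = (capacity_ah, r0, r1, c1); current_a > 0 means discharge.
    """
    capacity_ah, r0, r1, c1 = params
    a = np.exp(-dt_s / (r1 * c1))  # exact discretisation of the RC branch
    soc, v_rc = soc0, 0.0
    out = np.empty(len(current_a))
    for k, i in enumerate(current_a):
        out[k] = ocv(soc) - v_rc - r0 * i
        soc -= i * dt_s / (3600.0 * capacity_ah)
        v_rc = a * v_rc + r1 * (1.0 - a) * i
    return out


def sse_objective(params, current_a, measured_v, dt_s, soc0, ocv):
    """Sum of squared voltage errors over the whole window."""
    residual = measured_v - simulate_voltage(params, current_a, dt_s, soc0, ocv)
    return float(residual @ residual)
```

Any bounded minimiser, such as a PSO routine, can then search over `params`. Each evaluation replays the whole current profile through the model.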

The authors also didn't specify how many particles the swarm contained.

Result: the particle swarm optimisation algorithm converges after 600 iterations and finds the cell capacity with a mean absolute error of 0.74% and the internal resistance with a mean absolute error of 1.7%. The algorithm is also robust to artificially added sensor noise.

I think the results are good and it's essential to estimate all cell parameters at once.

However, the algorithm is very expensive to compute. On each of the 600 iterations, for each particle (and we don't know how many particles were used), the differential equations of the Thevenin equivalent circuit model must be solved over 6300 time steps, and then the squared error against the actual measurements computed. There is apparently no way to reduce the amount of computation done in all these steps and iterations.
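To put a number on it, assume a hypothetical swarm of 30 particles (the paper doesn't report the size) and the 1-second step guessed above. The number of model time steps per estimation run is then:

```latex
N_{\text{model steps}} = N_{\text{iterations}} \times N_{\text{particles}} \times N_{\text{samples}}
  = 600 \times 30 \times 6300 = 113\,400\,000
```

That is roughly 10⁸ model updates, each followed by accumulating a squared error, for a single parameter estimate.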

That said, across successive runs of the cell parameter estimation (if they happen, for example, once every several minutes or hours), the swarm's global best can be initialised with the parameters from the previous run. It would be interesting to know whether this makes the algorithm converge in fewer than 600 iterations.
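One way to implement such a warm start is sketched below. It is my assumption, not something the paper describes. One particle is placed exactly on the previous run's estimate, and the rest are scattered around it with a small relative spread (5% here, an arbitrary choice) and clipped to the search bounds. When the objective is evaluated on these positions, the previous solution becomes the initial global best unless another particle already beats it.

```python
import numpy as np


def warm_start_swarm(previous, lower, upper, n_particles=30, rel_spread=0.05, seed=0):
    """Initial particle positions seeded from the previous estimation run.

    Particle 0 sits on the previous estimate; the others get multiplicative
    Gaussian perturbations of relative size rel_spread, clipped to the bounds.
    """
    rng = np.random.default_rng(seed)
    previous = np.asarray(previous, float)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    noise = rng.normal(0.0, rel_spread, size=(n_particles, previous.size))
    swarm = np.clip(previous * (1.0 + noise), lower, upper)
    swarm[0] = np.clip(previous, lower, upper)
    return swarm
```

Keeping some spread matters. Cell parameters drift slowly with ageing and temperature, so the swarm still needs to explore a neighbourhood of the old optimum rather than collapse onto it.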

It would also be interesting to compare particle swarm optimisation with a stochastic cell parameter estimator based on an electrical cell model, in terms of both efficiency and estimation quality.

Conclusion

I think that "digital battery" projects in the cloud should focus on monitoring, fleet management, capacity and replacement planning, and, probably, end-of-life prognostics. The cloud should not control batteries directly during operation.

The onboard BMS should be completely autonomous: it should itself estimate the state of charge, estimate cell parameters, detect failures, and send alerts.